Efficient LLMs and RL & Agentic Systems

Residual Context Diffusion Language Models

Yuezhou Hu*, Harman Singh*, Monishwaran Maheswaran*, Haocheng Xi, Coleman Richard Charles Hooper, Jintao Zhang, Aditya Tomar, Michael W. Mahoney, Sewon Min, Mehrdad Farajtabar, Kurt Keutzer, Amir Gholami, Chenfeng Xu [Website]

When RL Meets Adaptive Speculative Training: A Unified Training-Serving System

Junxiong Wang*†, Fengxiang Bie*†, Jisen Li†, Zhongzhu Zhou†, Zelei Shao†, Yubo Wang†, Yinghui Liu†, Qingyang Wu, Avner May, Sri Yanamandra, Yineng Zhang, Ce Zhang, Tri Dao, Percy Liang, Ben Athiwaratkun, Shuaiwen Leon Song, Chenfeng Xu† (co-lead), Xiaoxia Wu† [Website]

Aurora is the first speculative system with day-0 support! You can start accelerating your LLM today! 😎

Beat the long tail: Distribution-Aware Speculative Decoding for RL Training

Zelei Shao, Vikranth Srivatsa, Sanjana Srivastava, Qingyang Wu, Alpay Ariyak, Xiaoxia Wu, Ameen Patel, Jue Wang, Percy Liang, Tri Dao, Ce Zhang, Yiying Zhang, Ben Athiwaratkun, Chenfeng Xu, Junxiong Wang [MLSys 2026]

Dobi-SVD: Differentiable SVD for LLM Compression and Some New Perspectives

Qinsi Wang*, Jinghan Ke*, Masayoshi Tomizuka, Kurt Keutzer, Chenfeng Xu [ICLR 2025]

ThunderAgent: A Fast, Simple, and Robust Program-Aware Agentic Inference System

Hao Kang*, Ziyang Li*, Xinyu Yang*, Weili Xu, Yinfang Chen, Junxiong Wang, Beidi Chen, Tushar Krishna, Chenfeng Xu, Simran Arora [Website]

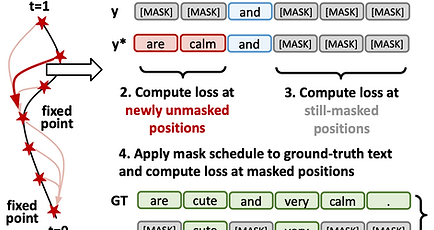

CDLM: Consistency Diffusion Language Models For Faster Sampling

Minseo Kim, Chenfeng Xu, Coleman Hooper, Harman Singh, Ben Athiwaratkun, Ce Zhang, Kurt Keutzer, Amir Gholami [MLSys 2026]

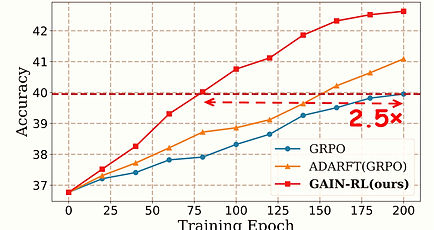

Angles Don’t Lie: Unlocking Training-Efficient RL Through the Model’s Own Signals

Qinsi Wang*, Jinghan Ke*, Hancheng Ye, Yueqian Lin, Yuzhe Fu, Jianyi Zhang, Kurt Keutzer, Chenfeng Xu†, Yiran Chen† [NeurIPS 2025 Spotlight]